#cofacts

2023-04-01

cai

13:01:38

I gathered the related material in one place; I didn't expect the story to still be developing.

The poster, paired with some odd public commentary

https://www.facebook.com/search/posts?q=%E7%B6%A0%E8%89%B2%E6%B5%AA%E6%BD%AE&filters=[…]wiLFxcXCJlbmRfZGF5XFxcIjpcXFwiMjAyMy0xMi0zMVxcXCJ9XCJ9In0%3D

There was also a related interpellation in the Legislative Yuan

https://www.facebook.com/xiao.s.feng/posts/pfbid06tBvYMLAW6NAP2dJGvbCRj6qgmXcvNbYqM7ZqbaKUvkSszgzAkA8sdcoCcnSbbs1l

https://ivod.ly.gov.tw/Play/Clip/300K/144894 The Legislative Yuan VOD doesn't let you jump to a point on the timeline, which is a hassle.

The organizer's own statement

https://www.facebook.com/GreenSensationTW/posts/pfbid028QkuxjAKpNSK9724uwLG4U9MJYfUEoFNqR6gp68sUUw8GYg4dVNKQfBYLwWag1FLl

mrorz

23:58:28

So, about the AI reply on the right, where a question about an image turned into one about a large-scale power outage:

https://g0v-tw.slack.com/archives/C2PPMRQGP/p1679915874362429?thread_ts=1679887328.683419&cid=C2PPMRQGP

I checked the database: the content I sent to the AI was empty. It got just these three messages:

1. Today is March 27, 2023. You are an assistant that helps readers with media literacy. You always speak in Taiwanese Traditional Chinese. A reader has sent you a message circulating online.

2. (empty string)

3. As a member of the audience, which parts of this message should I pay attention to? Please excerpt the parts of the message that need particular attention and explain why the audience needs to pay attention to them. Thank you.

So the line in the screenshot, "Reports indicate that Taiwan will have large-scale power outages in 2023," was entirely made up by ChatGPT lol

2023-04-02

mrorz

01:02:20

The ChatGPT response feature has now been released on both the website and the chatbot.

mrorz

08:12:52

mrorz

08:15:25

And then there's the April Fools' Day + made-up message case, ugh

https://cofacts.tw/article/1t9hir08g8he4

mrorz

08:37:53

When the message is long, the fact-check response gets truncated

https://cofacts.tw/article/3vp866m6tl507

mrorz

11:08:37

I'll add one more line to the prompt telling it:

if there isn't enough information, just say "insufficient information."

Admit what you don't know.

mrorz

15:48:43

Let's revert for now.

cai

16:03:58

」

cai

16:04:57

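"There are no written replies yet; we suggest maintaining a healthy skepticism toward it." Was this wording there before?

The phrase "healthy skepticism" (健康的懷疑) reads a bit awkwardly.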

mrorz

16:09:15

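The "healthy skepticism" (健康的懷疑) wording is new.

mrorz

16:09:45

I'll revert to the old version shortly and hide the AI reply for now.

mrorz

16:23:49

The Chinese-language site has been rolled back to the 20230318 version; the English site stays on the 20230402 version. That makes it easier to analyze the AI responses from this period, because they can be shared directly like this --> https://en.cofacts.tw/article/1a84s1vm1wh1x

mrorz

16:24:35

The Chinese-language chatbot has been rolled back too.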

2023-04-03

mrorz

00:32:29

"Reasonable skepticism" (合理的懷疑) sounds great.

"Reasonable skepticism" is indeed what I meant,

as opposed to the somewhat unhealthy skepticism of conspiracy theories.

2023-04-04

ronnywang

16:28:55

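Question:

```
Today is March 27, 2023. You are an assistant that helps readers with media literacy. You always speak in Taiwanese Traditional Chinese. A reader has sent you a message circulating online; its content appears between "Start" and "End".

Start

End

As a member of the audience, which parts of this message should I pay attention to? Please excerpt the parts of the message that need particular attention and explain why the audience needs to pay attention to them. Thank you.
```

Answer:

```
Thank you for your question. Because the content of your message was not provided, I cannot excerpt the parts that need particular attention. Given that there is a lot of false and misleading information online, I suggest that readers stay skeptical whenever they receive any online message and verify its truthfulness as far as possible, taking particular care when the content conflicts with their own values or common sense. If you come across a suspected rumor or false information, you can also check with authoritative media, professional organizations, or the relevant authorities to make sure the information you get is correct and reliable.
```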

mrorz

16:30:00

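Yeah, this is what it always generates once the prompt mentions "media literacy." Pretty good.

mrorz

16:30:15

If the URLs are all replaced out first, this is indeed what you get.

mrorz

16:30:29

My plan: if the URL resolver returns nothing, just don't ask ChatGPT at all.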
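The plan above (skip the AI call when nothing is left after URL handling) could look roughly like the sketch below. It is only an illustration: `URL_PATTERN`, `text_for_ai`, and `should_ask_ai` are hypothetical names, and the real URL resolver is not shown in this log, so its output is modeled as a plain list of strings.

```python
import re

# Hypothetical pattern for illustration; the real URL handling is not shown in this log.
URL_PATTERN = re.compile(r"https?://\S+")


def text_for_ai(message: str, resolved_contents: list[str]) -> str:
    """Return the text to send to the AI, or "" when nothing is left to analyze."""
    # Drop the raw URLs from the message itself.
    without_urls = URL_PATTERN.sub("", message).strip()
    # Keep only non-empty results from the (assumed) URL resolver.
    resolved = "\n".join(c.strip() for c in resolved_contents if c.strip())
    return "\n".join(part for part in (without_urls, resolved) if part)


def should_ask_ai(message: str, resolved_contents: list[str]) -> bool:
    """Skip the AI call when the message is empty after URL handling."""
    return text_for_ai(message, resolved_contents) != ""
```

With this guard, a message that is only a URL whose page could not be resolved never reaches the model, which avoids the empty-string case that produced the invented power-outage text.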

ronnywang

16:31:22

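I wanted to see whether there's any statement so objective that it can't come up with advice about it, but that seems kind of hard XD

```
Thank you for your question. Based on the message you provided, here are my excerpts and explanations of what the audience should pay attention to:

Original message: "If you want to get up on time in the morning and not be late, you can set an alarm."

Parts that need particular attention:

This is a common suggestion: The advice in this message is quite common and may lack practical value for the audience, so the audience should pay attention to its practicality.

If you want to get up on time and not be late: The premise of this advice is that the audience wants to get up on time and not be late. The audience therefore needs to judge from their own situation whether they need to adjust their wake-up time.

Set an alarm: The specific method suggested is to adjust the alarm time, but this is not the only method. The audience can choose another method that suits them, such as adjusting their sleep schedule or changing their habits.

In short, the points above remind the audience to pay attention to the practicality of this advice, their own wake-up needs, and that they can choose a suitable method based on their own situation.
```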

mrorz

16:32:29

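Personally, I think the way it falls back by default to things like "make sure the information is reliable"

is actually pretty good.

mrorz

16:32:47

It already achieves the goal of "slowing down how fast forwarded messages spread."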

2023-04-05

mrorz

02:00:27

Where does the claim in this post come from: "TSMC's revenue from its US production capacity must be shared at a certain percentage"?

https://cofacts.tw/article/3sb482olnglg2

The UDN report from early March that I've found so far, https://udn.com/news/story/7240/7012194, actually sources a Chinese website; I'm not sure what other keywords to try.

mrorz

02:02:14

Oh, the source is the CHIPS Act

https://www.bnext.com.tw/article/74376/uschip-cour-230308?

mrorz

02:04:53

https://www.moneydj.com/kmdj/news/newsviewer.aspx?a=f0fdc8e0-6ec1-4a84-aad0-c1580a38047d

This one names the foreign media sources:

> The Financial Times and Reuters report that, under new rules released by the US Department of Commerce on February 28, US chipmakers that receive subsidies from the US$39 billion federal fund for building a cutting-edge domestic semiconductor supply chain must agree to a clause barring them from expanding production in China for the next 10 years.

mrorz

11:57:18

Today's agenda

https://g0v.hackmd.io/@cofacts/meetings/%2FEVyIDQLUTA-p1CHDTSxhjA

Does anyone want to start earlier today, say 6pm? The sooner we start, the sooner we're done (?)

nonumpa

12:27:19

I'm not free until 6:30pm.

mrorz

12:28:09

OK, how about 6:30 then? What does everyone think?

mrorz

12:28:18

Or should it be 7pm?

nonumpa

12:30:59

Either works.

mrorz

12:31:29

I think 18:30 works well.

Thanks, thanks!

mrorz

12:35:10

The avatar color, the avatar choice, and the name can all be changed.

Only the “from xxx” suffix is fixed and can't be changed.

cai

14:13:36

The document's permissions aren't open XD

mrorz

20:13:30

Fixed!

Technical details: when setting up the redirect from http://cofacts.g0v.tw --> https://cofacts.tw, I didn't account for Let's Encrypt needing to access files under http://cofacts.g0v.tw/.well-known, so I modified the nginx config so that .well-known works properly.

2023-04-06

mrorz

01:02:51

Thanks for sharing. Time to update these URLs.

mrorz

08:49:11

Test discord link

mrorz

13:32:32

This one is fairly popular; I wonder what text it was circulated with.

https://cofacts.tw/article/3fn4T4cBn6k8q-JUpI8S

mrorz

13:47:34

cai

19:04:18

There are quite a few posts of this one.

mrorz

22:12:04

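After 10m47s

It looks like this happened during the sequence of just getting off the plane -- singing the national anthems on the stage -- walking the red carpet.

It was actually red carpet the whole time ._.

https://www.youtube.com/live/EuOLgmfzOVA?feature=share

mrorz

22:15:23

Tsai Ing-wen did in fact raise her right hand to return the salute; she just didn't stop walking.

mrorz

22:34:41

The protocol Taiwan uses when receiving foreign guests: https://www.president.gov.tw/Page/112

After the gun salute and the salute music, the two countries' national anthems are played: first the visiting country's anthem, then the national anthem of the Republic of China. From the honor guard's salute until the anthems end, both heads of state salute with the hand over the heart (扶手禮).

After the anthems, the President accompanies the allied head of state on foot, led by the honor guard commander, to review the tri-service band and honor guard.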

cai

23:20:47

cai

23:24:41

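This one is hard to write.

If I understand the rumor correctly, it simply clips that segment and complains that the two presidents weren't walking side by side. From TTV's video they do appear to be side by side, but I'm not sure how best to explain the clipped segment.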

mrorz

23:43:04

mrorz

23:48:07

mrorz

23:50:28

2023-04-07

mrorz

00:07:00

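I think I know how to reply now.

• During a military review, the two heads of state usually walk side by side; the way of saluting may differ from country to country.

• When Macron received military honors in China, he first nodded in salute before the review and then acknowledged the troops' eyes-on salute, without stopping at any point.

• Tsai Ing-wen also saluted with her hand over her heart when receiving Guatemalan military honors and reviewing the troops.

TVBS's camera is quite close

https://youtu.be/HQuCdWaa2bA?t=1654

It looks like the Guatemalan president indicated he wanted to stop and salute and reached out and tugged at her, but it doesn't seem to be as exaggerated as the circulating message claims.

mrorz

00:14:12

It was also the hand-over-heart salute during Ma Ying-jeou's presidency.

https://www.president.gov.tw/NEWS/13293

mrorz

00:56:35

I've finished the reply; please take a look and see whether it's OK.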

cai

22:36:05

https://cofacts.tw/article/352fgg6gci7f2

The original message is "What should I do if I've already downloaded it?"

chatgpt:

> Here is the excerpted message content:

> "Recently there is a new virus called the 'haha virus' that automatically downloads an app called 'green box' onto your phone and then steals your personal information."

Why doesn't ChatGPT's analysis seem to match the original message?

mrorz

22:57:11

It made things up again lol

2023-04-08

mrorz

10:56:26

When comparing prompts, setting temperature to 0.1 with n=3 still seems to be a better way to check whether a prompt works with slight variants of a message.

The output from temperature=0 (what we actually use) often also shows up as one of the n=3 completions.
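As a rough sketch of the comparison setup described above, the two request bodies could be built like this. `temperature`, `n`, `model`, and `messages` are standard chat-completion request fields; the function names and the `gpt-3.5-turbo` default are assumptions for illustration, and no API call is made here.

```python
def comparison_requests(messages, model="gpt-3.5-turbo"):
    """Build two request bodies: the production setting and an exploration setting."""
    # Production setting mentioned in the log: deterministic-leaning, one completion.
    production = {"model": model, "messages": messages, "temperature": 0, "n": 1}
    # Exploration setting: slight randomness, three completions to compare.
    exploration = {"model": model, "messages": messages, "temperature": 0.1, "n": 3}
    return production, exploration


def production_among_samples(production_text, sample_texts):
    """True when the temperature=0 output also appears among the sampled ones."""
    return production_text.strip() in {s.strip() for s in sample_texts}
```

Checking `production_among_samples` over a set of test messages gives a quick signal of how often the three samples agree with the production output for a given prompt.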

gary96302000.eecs96

18:04:55

Hi @mrorz, I happened to see that you're suffering from GPT making things up here. I have some experience to share:

1. Lowering the temperature is the right direction; I just set it to 0.

2. For the current 3.5 version, the docs say that putting the prompt in the system message doesn't control the model very well, and my testing gave me the same impression. I suggest moving the role-play part into the user message; the token count is computed over everything together anyway.

3. If you want it to verify the given content, chain of thought can greatly increase robustness: have it explain its process and, along the way, answer whether what it said is correct.

4. The prompt should restrict it to using only the given content. Besides emphasizing this in various ways, the order of the content and the instructions also greatly affects the result. In my experience: write the main instruction first, then give your content, then add the n instructions that constrain it. You'll have to experiment with the ordering, but order matters. This goes against human intuition and is know-how you only pick up through use, haha.

That's what I've learned from using it over the past few months. I hope it helps!
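The ordering suggested in point 4 (main instruction, then content, then constraints) can be sketched as a small prompt builder. This is an illustration only: `build_user_prompt` is a hypothetical helper, and the "Start"/"End" markers are borrowed from the experiment earlier in this channel rather than from any recommended format.

```python
def build_user_prompt(main_instruction, content, constraints):
    """Order the parts as: main instruction, then content, then constraints."""
    # Main instruction first, then the content wrapped in delimiters.
    lines = [main_instruction, "", "Start", content, "End", ""]
    # The constraining instructions come last, as a numbered list.
    lines += [f"{i}. {c}" for i, c in enumerate(constraints, start=1)]
    return "\n".join(lines)
```

Keeping the order in one function makes it easy to try other orderings during prompt testing, since the advice above is that the best order has to be found by experiment.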

mrorz

18:22:31

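The point about the system prompt really resonates; we do indeed put the main task in the user prompt.

But because we're worried the instructions and the circulating rumor can't be told apart, we currently use three messages:

System: role setup and metadata such as the date

User: the rumor

User: the main request and constraints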
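The three-message layout above maps onto a chat `messages` list roughly as follows. This is a sketch under assumptions: `build_messages` and its parameters are hypothetical names, and the system text is a shortened English paraphrase of the prompt quoted earlier in this channel.

```python
def build_messages(today, rumor, request):
    """Build the three messages: system metadata, the rumor, then the request."""
    return [
        # System: role setup and metadata such as the date.
        {
            "role": "system",
            "content": f"Today is {today}. You are an assistant that helps "
            "readers with media literacy.",
        },
        # User: the circulating message, kept in its own message so it is
        # not mixed with the instructions.
        {"role": "user", "content": rumor},
        # User: the main request and constraints.
        {"role": "user", "content": request},
    ]
```

Keeping the rumor in a message of its own is what separates untrusted content from the instructions in this layout.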

mrorz

18:23:31

As for chain of thought, I haven't come up with a good way to use it yet.

@gary96302000.eecs96 any ideas?

gary96302000.eecs96

20:11:34

Detailed role setup can go in the user message; it should be somewhat more effective.

My understanding of chain of thought here is: ask it to reason/explain step by step and output that, then send its reasoning back to check whether it actually answers the question in the user input. If it does, output the desired result; if not, answer something like "the current information isn't enough to give a correct answer."

My guess as to why this works: since it's predicting the next word, giving it some premises to continue from makes what it says more reliable.
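The two-pass idea described above can be sketched as follows. Everything here is an assumption made for illustration: `ask` stands in for whatever function sends a prompt to the model and returns its text, and the prompt wording and `FALLBACK` text are invented, not taken from Cofacts.

```python
from typing import Callable

# Hypothetical fallback text for illustration.
FALLBACK = "The available information is not enough to give a correct answer."


def answer_with_check(question: str, ask: Callable[[str], str]) -> str:
    """Ask for step-by-step reasoning, then ask the model to verify it."""
    # Pass 1: request the reasoning, step by step.
    reasoning = ask(f"{question}\n\nExplain your reasoning step by step.")
    # Pass 2: send the reasoning back and ask whether it answers the question.
    verdict = ask(
        "Question:\n" + question + "\n\nReasoning:\n" + reasoning
        + "\n\nDoes the reasoning answer the question using only the given "
        "content? Reply with YES or NO."
    )
    # Only release the reasoning when the check passes.
    if verdict.strip().upper().startswith("YES"):
        return reasoning
    return FALLBACK
```

Passing `ask` in as a parameter keeps the sketch independent of any particular client library and makes the control flow easy to test with a stub.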

gary96302000.eecs96

20:24:31

Also, if you're troubled by the "tokens sent would exceed the 4096 limit" problem and want to count how many tokens will be used before making the API call, you can refer to this notebook: https://github.com/openai/openai-cookbook/blob/main/examples/How_to_count_tokens_with_tiktoken.ipynb

I use it myself and have confirmed that the count matches the token count the API returns.

The sample code looks roughly like this:

```python
import tiktoken


def count_token(text, model_engine):
    # ref: https://github.com/openai/openai-cookbook/blob/main/examples/How_to_count_tokens_with_tiktoken.ipynb
    try:
        encoding = tiktoken.encoding_for_model(model_engine)
    except KeyError as err:
        # Unknown model name: fall back to the cl100k_base encoding.
        print(err)
        print("Use default encoding model: cl100k_base")
        encoding = tiktoken.get_encoding("cl100k_base")
    num_tokens = len(encoding.encode(text))
    return num_tokens


def count_message_tokens(messages, model_engine):
    num_tokens = 0
    for message in messages:
        num_tokens += 4  # every message follows <im_start>{role/name}\n{content}<im_end>\n
        for key, value in message.items():
            num_tokens += count_token(value, model_engine)
            if key == "name":  # if there's a name, the role is omitted
                num_tokens += -1  # role is always required and always 1 token
    num_tokens += 2  # every reply is primed with <im_start>assistant
    return num_tokens
```
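Once the prompt tokens are counted, a pre-flight check against the 4096 limit mentioned above could look like the sketch below. It assumes the prompt and the reply share that one limit, which would also explain why long messages get truncated replies. `reply_budget`, `fits`, and the `min_reply_tokens=500` default are hypothetical choices for illustration.

```python
def reply_budget(prompt_tokens: int, context_limit: int = 4096) -> int:
    """Tokens left for the reply once the prompt has been counted."""
    # Assumption: prompt and reply share the same context limit.
    return max(context_limit - prompt_tokens, 0)


def fits(prompt_tokens: int, min_reply_tokens: int = 500, context_limit: int = 4096) -> bool:
    """True when enough room is left for a reply of the desired minimum size."""
    return reply_budget(prompt_tokens, context_limit) >= min_reply_tokens
```

When `fits` returns False, the caller can shorten the message or skip the AI call rather than send a request whose reply would be cut off.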

2023-04-09

pofeng (ocf)

00:31:56

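chain of thought,

I can't come up with a general one

pofeng (ocf)

00:36:02

What I don't quite understand, though, is what kind of response we want ChatGPT to produce for a rumor. The word "analysis" is a bit abstract to me.

mrorz

23:37:51

> What I don't quite understand, though, is what kind of response we want ChatGPT to produce for a rumor. The word "analysis" is a bit abstract to me.

It's the kind of "analysis" you'd find on a media literacy worksheet.

mrorz

23:44:07

It lets users know which parts they should be skeptical about and guides them to go google it themselves.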

2023-04-11

cai

13:40:14

https://cofacts.tw/article/3gx3wofjvpt5h

Why are so many people suddenly asking about the investment scam one?

mrorz

14:48:53

Looks like it just summarizes ._.

I'll add it to the prompt engineering sheet and test it.

2023-04-12

mrorz

11:07:49

Articles about using LLM / ChatGPT in fact-checking

• 2022/12 https://dcorney.com/thoughts/2022/12/09/inevitable-gpt3-post.html : A write-up of a conversation with ChatGPT. The author thinks it could serve as one stage of an NLP pipeline, but factual accuracy doesn't appear to be something ChatGPT was optimized for in training.

• 2023/04 https://psyarxiv.com/qnjkf : Uses it directly for true/false claim classification, with about 72% accuracy, and claims it can help flag misinformation.

◦ Evaluation dataset: 21,152 statements from PolitiFact + 5 categories (true ~ pants on fire)

◦ The prompt is just this: “Can you fact-check a claim for me? When fact-checking use clear language such as ‘true’ and ‘false’, and avoid negations”

• 2023/02 https://www.wired.co.uk/article/fact-checkers-ai-chatgpt-misinformation : People have been using BERT to build ClaimHunter since 2020.

Teemo

18:11:36

[The best lesson for PTT] Reddit is flooded with disruptive AI posts; moderators lament: "We're already prepared for the apocalypse" | TechOrange 科技報橘

https://buzzorange.com/techorange/2023/04/12/reddit-brace-chatgpt-spam/

2023-04-13

cai

17:53:45

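So are they infighting?

cai

23:46:26

https://cofacts.tw/article/qPkfeocBn6k8q-JUp74Y

This could be material for the next presentation.

Winning utility invoices do get a notification letter.

If you can't find it among the regular prizes on the uniform invoice website, look under the "cloud invoice exclusive prizes" (雲端發票專屬獎) 😆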

2023-04-14

mrorz

00:18:23

A real prize win got treated as a scam lol

mrorz

13:54:17

The ad case we discussed on Wednesday has turned into CIB (coordinated inauthentic behavior) today.

Takedown notice, requesting review:

https://github.com/cofacts/takedowns/pull/96/files?short_path=fc4502c#diff-fc4502cb508c91cb882a3a252679a9bf4db0e0834a4f9a443451aad93f5e6862

mrorz

18:36:54

It can search the web by itself (as if it implemented the browsing plugin part on its own)

https://github.com/Torantulino/Auto-GPT

It seems we could borrow how it generates keywords and use that for the scenario 1 extended goal we discussed on Wednesday.

When there's time later, we could look at how it extracts content from the HTML for ChatGPT to read when it browses a page.

gary96302000.eecs96

20:49:32

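This one is pretty interesting too. The UI is better made and there's no need to install a separate vector DB: https://agentgpt.reworkd.ai/

mrorz

20:59:44

That frontend tech stack is totally insider-grade.

Must be a frontend engineer.

gary96302000.eecs96

21:01:54

Whoa! The frontend world is too scary now, with all kinds of stuff. The difficulty is S-rank for me 🤢

mrorz

21:02:12

Deep learning is obviously the hard one XD

gary96302000.eecs96

21:03:18

I thought everyone was an AI communicator now, and it's all about who has better chemistry chatting with ChatGPT, haha

mrorz

21:04:24

gary96302000.eecs96

21:05:45

Hahahaha

gary96302000.eecs96

21:05:57

This is hilarious, I'm writing it down

gary96302000.eecs96

21:06:56

Sometimes when I see this kind of thing, I can't tell whether they're serious or seriously joking

mrorz

21:16:02

Probably serious (shrug)

mrorz

23:22:10

By the way, isn't it about time to post about the ChatGPT responses on the fan page?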

2023-04-15

mrorz

21:54:38

Messages related to the J-20 over Hualien

https://cofacts.tw/article/tvr14rgnso29

https://cofacts.tw/article/13lig9p9acijt

https://cofacts.tw/article/7qqwjlz7l3vz

mrorz

22:03:58

Past and current audio/video of sonic booms in Hualien

Security camera timestamp: 2023/4/10 08:40

https://youtu.be/gftt25E_JM8

Video of the 2022/9/29 F16 supersonic sonic boom: https://youtu.be/l1vaOmVAKyU

2023-04-16

cai

13:20:11

As I recall, the news only reported that the various agencies denied it, and then this rumor appeared.

cai

13:20:38

The abandoned solar panels in Tainan series

https://cofacts.tw/article/3vmBZYcBn6k8q-JUOKaI

https://cofacts.tw/article/PvkQeIcBn6k8q-JUcbvH

https://cofacts.tw/article/3tsdeniyvxmaz

https://cofacts.tw/article/y72jhwdqd9wu

cai

13:26:20

https://www.newsmarket.com.tw/solar-invasion/ch06/

News&Market (上下游) reported in 2020 that it violated the regulations, but the Tainan City Government had no legal basis to tear it down.

mrorz

23:21:44

This is from an earlier FB post by 台灣廢墟研究.

Let me look for it.

mrorz

23:27:02

The post has actually been deleted……

I remember that under the original post there was also a discussion where a solar farm operator explained things and clashed with locals.

Now all I can find is follow-up commentary like this:

https://m.facebook.com/story.php?story_fbid=pfbid02aJWq3YxLTRn436qHhUwZyoo1dWeVxX1qCXW6XgH9aeH8NhD9aeNBm5SfJESAxdPyl&id=665925574&mibextid=Nif5oz

2023-04-17

cai

00:13:30

I really want to block that gory video; I get a scare every time I log in 😨

mrorz

00:33:02

It has been reported.

Is it the disemboweling one? Yikes.

mrorz

00:34:54

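So that confirms it's the situation News&Market reported on.

mrorz

00:45:14

So, among the four circulating messages:

https://cofacts.tw/article/3tsdeniyvxmaz

"Left to go derelict after acceptance inspection" --> False. Because the farmland underneath was not actually being farmed, the local government ordered the operator to stop generating power. Taipower does not pay sites like this, and the operator absorbs the loss itself.

https://cofacts.tw/article/y72jhwdqd9wu

I'm not sure whether this one is the same image?

For the other two images, the reply would mostly point out the original source and then tell the story behind it, emphasizing that because power generation was stopped, Taipower doesn't pay.

cai

00:55:53

Yes, but it has a lot of inquiries, so it probably really is a rumor.

mrorz

00:57:41

But what News&Market really focuses on is whether solar farming crowds out agriculture, and the fact that sites cut off from the grid in the past were not properly restored to farmland. Those are also the points that deserve attention. If the rumor misleads people into blaming Taipower instead, that does nothing to help Taiwan's farmland.

mrorz

00:58:00

Ah, but there's no text.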

mrorz

10:34:07

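The News&Market report is from 2020.

The 2022 regulations seem to require operators to restore the land to its original state.

https://law01.tainan.gov.tw/glrsnewsout/LawContent.aspx?id=GL001564

Where the applicant's original consent for permitted use, approval of the original business plan, original development permit, development consent, or original land-use permit has been revoked or repealed, the competent authority for the target industry shall, pursuant to the Regional Plan Act or the Urban Planning Act respectively, order within a time limit that the use be changed, the use be stopped, the structures on the land be demolished, or the land be restored to its original state.

mrorz

10:35:55

I'd kind of like to see whether there's a list of sites whose facility use permits were revoked,

so I can match it to the site in the circulating photo

and then check whether that company is one of the operators that skipped town in the News&Market report.

mrorz

11:00:51

The Taiwan Environmental Info Center (環境資訊中心) piece here gives the other side: it argues that the government's crackdown also swept up operators who were growing something, just not what their plan said.

https://e-info.org.tw/node/206178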

mrorz

11:18:17

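2017 Control Yuan corrective measure

https://www.cy.gov.tw/News_Content.aspx?n=124&sms=8912&s=8147

> In handling the revocation of equipment registrations after consent for agricultural facility use had been revoked, the Bureau of Energy listed and tracked only 37 cases, which differs greatly from the 108 cases on the Council of Agriculture's revocation list. Moreover, on grounds such as "improvement in progress," those 37 cases continued to receive electricity subsidies of more than NT$20 million after their use consent was revoked in ROC year 105 (2016), to say nothing of what a case-by-case comparison against the Council of Agriculture's list would show; there is no telling how much public money was wasted. This shows that the Bureau of Energy failed to track the cases properly, was lax in enforcement, and wasted public funds, which constitutes negligence. The Ministry of Economic Affairs failed in its supervisory duty and cannot escape blame either.

So it seems some "fake farming, real solar" sites did get paid after all; I need to keep that in mind when writing the reply.

mrorz

11:30:11

Control Yuan investigation report 106財調0034 https://cybsbox.cy.gov.tw/CYBSBoxSSL/edoc/download/53089

mrorz

12:32:14

According to the online cadastral map service (地籍圖資網路便民服務系統), the site in the circulating message is

land numbers 6-2 through 6-35, 岩埤段, Liujia District, Tainan City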

mrorz

13:23:44

2023-04-19

cai

14:05:27

Replied to a thread: 2023-02-22 13:47:00

mrorz

15:16:54

Replied. Thanks for reporting it.

mrorz

15:18:57

While searching, I found there was also a wave on 3/3.

https://cofacts.tw/article/PPzDp4YBC7Q3lHuUYTol

https://cofacts.tw/article/E_zkpoYBC7Q3lHuU_Dnb

Ever since 薩泰爾娛樂 stopped doing political satire,

it's as if Taiwanese people have forgotten what satire is for.

mrorz

17:35:23

And then someone else flatly concluded it was a sonic boom from a PLA aircraft.

https://def.ltn.com.tw/article/breakingnews/4275151

Teemo

22:29:59


2023-04-20

mrorz

01:03:50

The news doesn't seem to have any intention of doing health education lol

mrorz

01:03:58

Is this circulating on LINE?

mrorz

01:07:00

Using ChatGPT to generate a media-literacy perspective for the people who receive a message

is also like having ChatGPT write a prompt for a human.

https://www.facebook.com/iweilai0924/posts/pfbid02yDpeHN9Vw3nMewBZpDzShkMHBffvLqwxmxGASMqwPofKHzVbrBf3Y6M5yLWUBvFql

cai

13:13:28

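I think I've seen it, and it got mislabeled as Chinese political propaganda again.

cai

13:15:44

I can't rescue the category anymore; every time I downvote it, someone tags it again.

mrorz

13:36:34

I've replied using https://www.hpvaway.com.tw/ for now.

@teemocogs, see if you can think of a better way to reply.

cai

14:11:33

Replied to a thread: 2023-03-22 17:56:42

https://cofacts.tw/article/3uj8zkufugeq5

Now the argument has shifted to why the NHI card has to be used.

mrorz

14:46:00

It's Master Wang (王大師) lol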

2023-04-21

cai

13:31:19

https://cofacts.tw/article/35kul4xlhrwy9

This one has passed 100 inquiries. It lumps together two things, the advice to answer "wrong" (不對) when you get a scam call and CommonWealth Magazine's report on the household registration and conscription data leak, so it's really hard to reply to.

ronnywang

13:34:15

Did they actually attach a PDF file? XD

cai

13:46:37

The PDF it mentions is probably the CommonWealth Magazine report XD

mrorz

14:15:50

Compared with the 3/17 message, this 4/16 one somehow feels like it has a much stronger mainland-Chinese flavor (中國味兒).

mrorz

14:17:48

Neglecting information security feels like handing ready-made material straight to the other side of the strait.

ronnywang

14:20:28

I just got curious and checked whether ChatGPT could pick up on the "mainland-Chinese flavor" XD

mrorz

14:23:49

But ChatGPT itself writes with a mainland-Chinese accent.

It probably can't tell the difference.

ronnywang

14:24:38

If we wanted to build a "支語警察" (mainland-phrasing police) bot, Taiwan would probably have to train its own model.

ronnywang

14:25:25

Have any of you tried Claude?

ronnywang

14:26:36

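Claude currently has an official Slack app that can be used for free on Slack. I've tried it in another workspace, and so far it seems to work fairly well; the downside is that it's wordier than ChatGPT XD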

mrorz

15:40:08

I haven't tried Claude.

cai

17:01:07

I haven't tried either of them~

cai

17:02:43

Looks like this afternoon's hot topic is the July NHI subsidy for seniors in Tainan XDD

gary96302000.eecs96

17:23:29

There doesn't seem to be a Traditional Chinese dialogue dataset? If there were enough data, I'd like to try fine-tuning Llama on it, haha.

gary96302000.eecs96

17:26:22

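That said, quite a few teams across the strait are building Chinese versions of ChatGPT now, haha, since having to get around the firewall to use ChatGPT isn't very convenient for them.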

cai

21:31:40

The DC sync is down again.

Posting too many images doesn't seem to work either; I saw `<system> file image.png too big to download (3572233 > allowed size: 1000000)`

cai

21:32:45

But this message did get through 😮

2023-04-22

cai

00:00:57

@null

02:31:17

ping

mrorz

02:31:34

pong

mrorz

13:23:34

It should be alive.

浩倫洪

17:21:09

@dillen99999999 has joined the channel

cai

17:34:14

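The library had a copy, so I skimmed it. CommonWealth spent seven pages on it, with a chart listing which agencies failed to do what they should have, plus two cases from abroad XDD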

2023-04-23

cai

00:25:06

The solar storm series. Why do so many people ask about this kind of thing?

https://cofacts.tw/article/bgt3jgdxg2wx

https://cofacts.tw/article/4sfobxvy5bgq

https://cofacts.tw/article/30fkx5ub9gthu

https://cofacts.tw/article/387opdl0qviqy

https://cofacts.tw/article/y97hwgdo1joh

https://cofacts.tw/article/3175rcyxfwtl8

https://cofacts.tw/article/8_lEqYcBn6k8q-JU-_Ln

mrorz

23:45:23

OMG, this YouTuber is already so famous; is it really necessary to chase views like this...

2023-04-24

mrorz

00:03:35

Forecast for 4/23~4/24

G1-G2 (Minor-Moderate) Geomagnetic Storm

https://www.swpc.noaa.gov/news/g1-g2-minor-moderate-geomagnetic-storm-watches-23-24-april-2023

Scale

https://www.swpc.noaa.gov/noaa-scales-explanation
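
For anyone replying to the solar storm messages, here is a minimal sketch of how the NOAA G-scale on the page above lines up with the planetary Kp index. The function name `g_scale_for_kp` is made up for illustration; the thresholds follow the NOAA scale table (G1 at Kp 5 up to G5 at Kp 9).

```python
from typing import Optional

# NOAA geomagnetic storm scale: (minimum Kp, level, descriptor),
# ordered from the most to the least severe level.
G_SCALE = [
    (9, "G5", "Extreme"),
    (8, "G4", "Severe"),
    (7, "G3", "Strong"),
    (6, "G2", "Moderate"),
    (5, "G1", "Minor"),
]


def g_scale_for_kp(kp: float) -> Optional[str]:
    """Return e.g. 'G2 (Moderate)' for a Kp value, or None below storm level."""
    for min_kp, level, descriptor in G_SCALE:
        if kp >= min_kp:
            return f"{level} ({descriptor})"
    return None
```

So the G1-G2 watch for 4/23~4/24 corresponds to Kp 5 to 6, the two lowest storm levels on a five-level scale.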

2023-04-25

mrorz

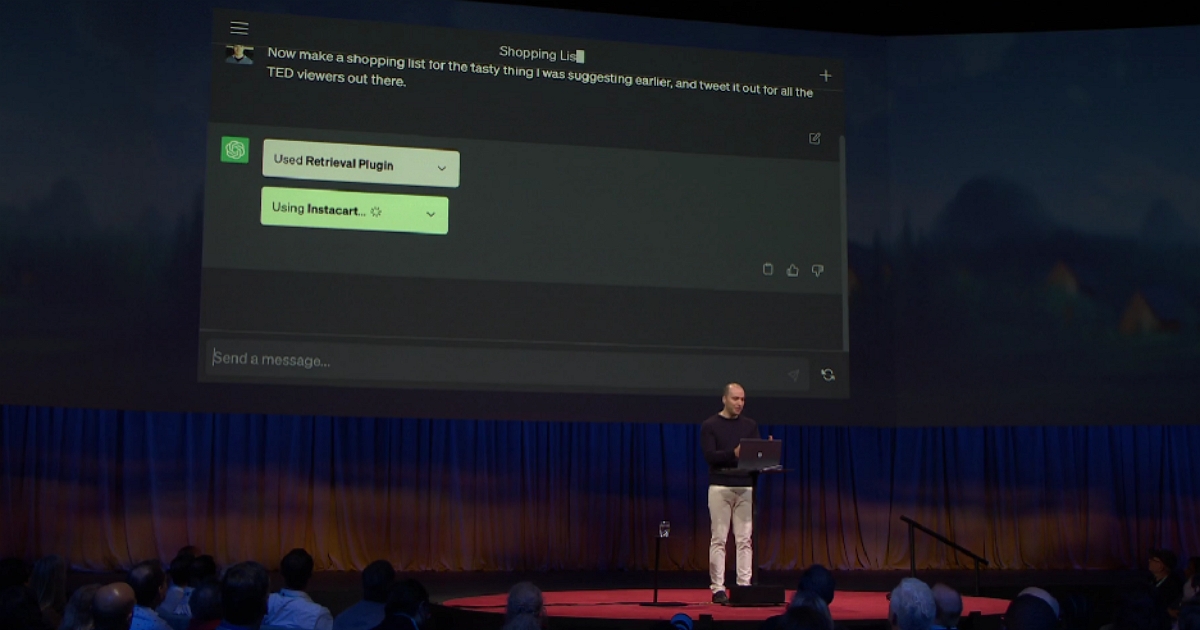

08:48:25

I want the browsing plugin.

https://www.techbang.com/posts/105715-openai-shows-the-official-version-of-autogpt-live-creates#mceTableOfContent_1gup29p5c0

mrorz

2023-05-02 16:01:13

How LaMDA approaches groundedness:

LaMDA first generates an answer

LaMDA-Research asks questions

The Toolset (TS) looks things up and extracts information to bring back

https://arxiv.org/pdf/2201.08239.pdf

https://ai.googleblog.com/2022/01/lamda-towards-safe-grounded-and-high.html#:~:text=of%20the%20dialog.-,Factual%20Grounding,-While%20people%20are
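
A rough sketch of the three steps above, just to make the control flow concrete. Everything here is hypothetical: `grounded_answer`, the callables passed in, and the `"TS:"` prefix used to mark a lookup request are illustrative assumptions, not LaMDA's actual interface.

```python
from typing import Callable, List


def grounded_answer(
    question: str,
    base_model: Callable[[str], str],
    research_model: Callable[[str, str, List[str]], str],
    toolset: Callable[[str], str],
    max_rounds: int = 4,
) -> str:
    """Draft an answer, then let a research step query a toolset before replying."""
    draft = base_model(question)  # step 1: generate an answer first
    evidence: List[str] = []
    for _ in range(max_rounds):
        # step 2: the research step either asks the toolset or answers the user
        step = research_model(question, draft, evidence)
        if step.startswith("TS:"):
            # step 3: the toolset looks things up and brings information back
            evidence.append(toolset(step[len("TS:"):].strip()))
        else:
            return step  # anything without the TS: prefix goes to the user
    return draft  # fall back to the ungrounded draft if no round settles
```

The point of the loop is that the final reply can be conditioned on retrieved evidence instead of only on what the model remembers.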

mrorz

2023-05-04 21:54:04

Yet another case of using doc retrieval to avoid hallucination

https://vectara.com/avoiding-hallucinations-in-llm-powered-applications/
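
A minimal sketch of the doc retrieval idea, assuming a toy word-overlap scorer in place of a real search index or embedding model. The names `retrieve` and `build_grounded_prompt` and the prompt wording are made up for illustration and are not taken from the linked article.

```python
from typing import List


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return up to k docs that share at least one word with the query."""
    q_terms = set(query.lower().split())
    scored = [(len(q_terms & set(d.lower().split())), d) for d in docs]
    # Stable sort: docs with equal overlap keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_grounded_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Build a prompt that tells the model to answer only from retrieved passages."""
    passages = retrieve(query, docs, k)
    context = "\n".join(f"- {p}" for p in passages) or "- (no relevant passage found)"
    return (
        "Answer using only the passages below. "
        "If they do not contain the answer, say you do not know.\n"
        f"Passages:\n{context}\n"
        f"Question: {query}"
    )
```

With no matching passage the prompt says so explicitly, which gives the model room to answer "I don't know" instead of making something up.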

@null

08:49:16

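Stumbled on this~ OpenAI's Discord has a #real-or-fake channel where the community posts an image and everyone guesses whether it's a real photograph or a generated image. Discord https://discord.gg/openai https://cdn.discordapp.com/attachments/1060178087947542563/1100221959373078528/IMG_9405.png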

cai

13:07:14

This reminds me of the old News Helper (新聞小幫手).

mrorz

23:57:27

Oh, I meant the plugin that lets ChatGPT search the web,

not a browser extension XD

mrorz

23:57:35

HackMD

# Cofacts meeting notes ## 2023 - [20230426 meeting notes](/4HTR5QCcRRqIfc3-KdgRlA) - [20230419 meeting notes](/XWqUCQi2RKyJ

2023-04-26

mrorz

20:21:38

Sorry, there's a power outage where I am.

mrorz

20:21:47

Taipower is working on emergency repairs.

gary96302000.eecs96

20:54:25

...

2023-04-27

cai

13:37:14

Twitter login on the website is broken: Internal Server Error

cai

13:39:26

fb, github, and google don't have this problem.

mrorz

16:52:31

It was the wind on Orchid Island (蘭嶼) that snapped a utility pole.

mrorz

16:54:05

Here's what a user who was reported for sending pornographic content had been submitting to Cofacts.

It's all a string of his conversations with a LINE seller of (scam) male-enhancement drugs.

The last message is the notice that the drugs he ordered had arrived.

Kind of poignant (?)

(NSFW link) https://cofacts.github.io/community-builder/#/editorworks?type=2&day=365&userId=j4S8C_pG8Zwe0LinOVHRbvv4CM3-msmcyGw-TSjmMbGxsOSTc&showAll=1

mrorz

17:40:25

Replied to a thread: 2023-04-27 13:37:14

I looked into it, and it turns out their terms include this clause:

https://developer.twitter.com/en/developer-terms/policy

> *Once someone on your service authenticates via _Sign in with Twitter_ you must clearly display their Twitter identity.* Twitter identity includes the person’s current Twitter @handle, avatar, and Twitter logo. Any display of someone’s Twitter followers on your service must clearly show that the relationship is associated with Twitter.

Hmm... do people who log in to Cofacts with Twitter really want their Twitter handle displayed on Cofacts?

If not, I think removing Twitter login might be the better direction...

cai

18:38:49

Then I'll have to open a new account XDD

mrorz

21:07:30

Is the email on your Google and other accounts different? XD

cai

22:08:23

Oh, I get it now XD

2023-04-29

pofeng (ocf)

00:10:52

Ooh, this reply looks great.

ronnywang

09:23:21

Google Podcasts

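This episode is once again about things that are hard to talk about. With the CCP holding military drills around Taiwan again, we open our hearts and speak frankly, going from the rumors behind US-skepticism (疑美論) to the incomplete "war frame" (戰爭之框) in Taiwanese minds. From Pelosi's visit to next year's election and even the days that follow, what Taiwanese cannot avoid is the CCP's military aircraft harassing Taiwan and its verbal attacks and saber-rattling. Will they attack? Few countries in the world have to face this Russian-roulette-like question over the long term. Yet at the same time, some people in society feel nothing, some stay calm, some call for opposing armament and war, and others have started learning how to protect themselves and their homes. We invited 劉文, assistant research fellow at Academia Sinica, and 比鄰, co-founder of Cofacts 真的假的. We start with the latest cognitive warfare offensives and US-skepticism narratives in LINE groups, take listener submissions, and look at what may really be going on in Taiwanese hearts beneath the calm exterior, and at how people with an agenda use false information to manipulate our emotions. Listen to what the "war frame" is, because it affects Taiwanese, who may be unable to recognize, feel, or accept what is happening before their eyes, and so we may be unable to prepare effectively together and resist well. Listen to some small and possibly boring things, and to how we build from there the all-important "whole-of-society psychological defense" (全民心防). *Further reading: 民防作為一場公民運動:將「心防」視為備戰關鍵 / 劉文 時隔8個月再度圍台軍演 檢視中共武力戰術與用意 /中央社 美國不會出兵保台?台灣只是棋子?疑美論的現實與褻瀆 /梅復興 ■ Support the show with a recurring contribution: become a home-delivery subscriber of 午營咖啡 ■ Sponsor: <https://bit.ly/3ETHsvR> ■ Instagram: 不好意思請問一下|午營咖啡 ■ Collaboration: realityisbusiness@gmail.com ■ Editing and production: 韁河媒體工作室 (王伯維、孫聖峰、郭子捷)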

- 👍3

- 🦒1

cai

12:59:40

- 🤦1

mrorz

2023-05-02 13:55:39

Noting down a problematic response:

> The part of this message that deserves special attention is "the fine has gone up to 4500". This figure may surprise or worry people, but in fact, as of April 2023, the maximum traffic fine in Taiwan is only 2000 NTD, and the amount usually varies with the circumstances of the violation. The figure may therefore be exaggerated or wrong, and readers should doubt the message's authenticity and verify it further. In addition, the message offers no official source or supporting evidence, which readers should also take note of.

I'll just crank up the temperature and regenerate the AI reply.
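
For context on why turning up the temperature yields a different reply, here is a small sketch of temperature-scaled softmax, the usual way sampling temperature is described. It illustrates the general idea only; `softmax_with_temperature` is a made-up helper and says nothing about how the Cofacts AI reply pipeline or the ChatGPT API is actually implemented.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities; higher temperature flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

At a low temperature the top-scoring token dominates, while at a high temperature the less likely tokens get more probability mass, so a regenerated reply is more likely to differ from the problematic one. It does not make the new reply any more factual.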